Future vision: The Argus II series retinal implant, shown here inside an eye, uses an array of 60 electrodes to deliver visual information to a user's brain.

Retinal implants are designed to replace the function of damaged light-sensing photoreceptor cells in the retina. In particular, they are aimed at treating degenerative diseases such as retinitis pigmentosa and age-related macular degeneration. Using an array of electrodes placed either beneath the retina or on top of it, the devices work by electrically stimulating the remaining cell circuitry in the retina to produce pixel-like sensations of light, called phosphenes, in the visual field.

Peter Walter at the University Eye Clinic at Aachen, who chaired the Artificial Vision symposium in Bonn, Germany, where results from several projects were presented last week, notes that optimistic claims have been made about retinal implants in the past. But he says the success of several long-term studies has given researchers confidence that the remaining challenges are more technological than biological. "Within two or three years we could have products available," Walter says.

Ongoing trials involving one device, the Argus II, a retinal implant developed by Second Sight of Sylmar, CA, have been so promising that the company is already preparing for the market. "We are going to be starting the work to get applications for CE marking in Europe and authorization in the U.S. from the FDA," says Gregoire Cosendai, the company's director of operations for Europe.

In the past it has often been unclear whether the phosphenes seen by patients were due to the implant functioning correctly or to other factors, such as the recovery of photoreceptors triggered by the trauma of surgery--a phenomenon known as the rescue effect. But now that researchers have moved away from acute implantations--implanting and removing the devices during the same surgical procedure--to chronically implanting them, it is possible to test them more rigorously. Such experiments are difficult and time-consuming, but they can establish when the phosphenes are only occurring in the parts of the retina where there are electrodes, says Walter. "If you switch off the device, then this effect disappears," he says.

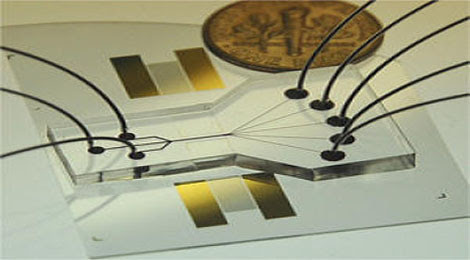

Trials of the Argus II have shown that some limited vision can be restored to blind patients, helping them to recognize objects and make out doorways or roadsides. The first commercial devices will offer this kind of vision, says Cosendai. The Argus II consists of a small chip containing about 60 stimulating electrodes and a glasses-mounted camera that feeds images and power to the implant via a wireless induction loop.

Bionic eye: Images are fed to the Argus II implant chip from a camera via a wireless induction loop, with the receiver attached to the outside of the eyeball.
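To give a rough sense of how little image detail about 60 electrodes can carry, the sketch below reduces a grayscale camera frame to a coarse grid of stimulation levels. It is an illustration only: the 6-by-10 grid layout, the eight stimulation levels, and the function name `image_to_electrode_levels` are assumptions made for this example, not a description of how the Argus II actually processes images.

```python
# Illustrative sketch only. The grid layout and the number of stimulation
# levels are assumptions, not details taken from the Argus II.
GRID_ROWS, GRID_COLS = 6, 10   # 60 electrodes in total (layout assumed)
LEVELS = 8                     # hypothetical number of stimulation levels


def image_to_electrode_levels(image, rows=GRID_ROWS, cols=GRID_COLS, levels=LEVELS):
    """Average-pool a grayscale image (list of rows, values 0-255) into a
    rows x cols grid, then quantize each cell to an integer in [0, levels - 1]."""
    height, width = len(image), len(image[0])
    if height < rows or width < cols:
        raise ValueError("image is smaller than the electrode grid")
    grid = []
    for r in range(rows):
        # Pixel rows covered by this grid row.
        top, bottom = r * height // rows, (r + 1) * height // rows
        grid_row = []
        for c in range(cols):
            # Pixel columns covered by this grid column.
            left, right = c * width // cols, (c + 1) * width // cols
            block = [image[y][x] for y in range(top, bottom) for x in range(left, right)]
            mean = sum(block) / len(block)
            # Map mean brightness 0-255 onto the available stimulation levels.
            grid_row.append(min(levels - 1, int(mean * levels / 256)))
        grid.append(grid_row)
    return grid


# Example: a frame that is dark on the left and bright on the right.
frame = [[0] * 50 + [255] * 50 for _ in range(60)]
for row in image_to_electrode_levels(frame):
    print(row)
```

Each printed row corresponds to one row of the hypothetical electrode grid; a real device would also have to account for the signal processing and patient adaptation that researchers describe as open challenges.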

There is hope that the resolution and granularity of these devices can be improved further and that the devices can be made more self-contained. At last week's symposium, Eberhart Zrenner, director of the Institute for Ophthalmic Research at the University of Tübingen, in Germany, presented the results of a trial involving a patient who was able to read eight-centimeter-high letters, albeit with the assistance of a large magnifying device called a dioptre lens. This was achieved using a 3-millimeter-diameter implant made up of roughly 1,500 electrodes, each connected to a photocell. These photocells are used both to sense light and to power the electrodes, which means no external power or camera is needed.

Although Zrenner's device is compact, it is only designed for semichronic implantation, and it is unable to last within the body for long periods of time, says Mark Humayun, a retinal surgeon at the University of Southern California who is involved in the Argus II trials. What's more, Humayun says that reading text has been demonstrated before, albeit with considerably larger letters. "It translates into little useful reading vision, not only because letters are too big, but because it often takes 30 seconds to recognize a single letter," he says.

Cosendai says that, for now, the field is taking small steps and trying not to overstate the potential. Initially, he says, retinal implants will be used to merely help people navigate and orient themselves.

The signal-processing side of these implants remains a key technical challenge, says Cosendai. A patient's brain often needs to be retrained to adapt to the new stimulation.

Rolf Eckmiller, another researcher in the field at the University of Bonn, says that much remains to be done. "Progress has been made, but we have so far underestimated the amount of work involved," he says.

Seeing shapes and edges may help many people become more mobile, Eckmiller says, but it's a big leap to restoring full vision or even the ability to recognize faces or to read. "There's a difference between seeing and recognizing a banana, and seeing something that might be a banana," he says. Currently our understanding of the signals required to make this leap is lacking, he says.

By Duncan Graham-Rowe