For years, researchers have touted graphene as the magic material for the next generation of high-speed electronics, but so far it hasn't proved practical. Now a new way of making nanoscale strips of carbon--the building block of graphene--could kick-start a shift toward superfast graphene components.

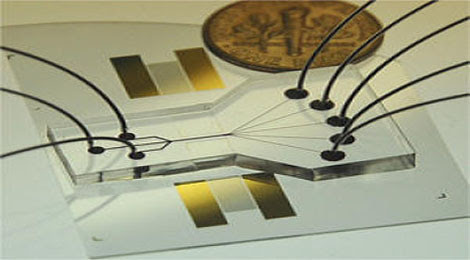

Graphene strips: The zigzag-shaped graphene nanoribbons in this image are a nanometer wide and 50 nanometers long.

The new method, which involves building from the molecular scale up, comes from researchers at the Max Planck Institute for Polymer Research in Germany and Empa in Switzerland. With atomic-level precision, the researchers made graphene nanoribbons about a nanometer wide.

Graphene, a one-atom-thick sheet of carbon, outperforms silicon, the material currently used in electronic components, in several respects. It conducts electricity better than silicon, it bends more easily, and it's thinner. Using graphene instead of silicon could lead to faster, thinner, more powerful electronic devices. However, unless graphene strips are less than 10 nanometers wide and have clean edges, they lack the electronic properties manufacturers need to use them in devices like transistors, switches, and diodes--key components in circuitry.

The team fabricated these skinny graphene strips by triggering molecular-scale chemical reactions on sheets of heated gold. This let the researchers precisely control the width of the nanoribbons and the shape of their edges. Molecules were arranged into long strings on the gold surface. When that surface was heated, adjacent strings linked and fused to form ribbon structures about one nanometer across, with a uniform zigzag edge.

"The beauty of that is that it can be done with atomic precision," says Roman Fasel, the corresponding author on the study. "It's not cutting, it's assembling."

Other ways of making nanoribbons involve peeling strips of graphene from a larger sheet, etching them with lithography, or unzipping cylinder-shaped carbon nanotubes. But such nanoribbons are thicker and have random edges.

"In nanoribbons, he who controls the edges wins," says James Tour, a graphene expert at Rice University, who was not involved with the work. "There is no way yet to take a big sheet of graphene and chop it up with this level of control."

"This type of nanoribbon would enrich and open up new possibilities for graphene electronics," says Yu-Ming Lin, a researcher working on graphene-based transistors at the IBM T. J. Watson Research Center in New York.

Graphene nanoribbons are still a long way from practical application, says Tour. "The next step is to make a handful of devices. That's not hard to do; the big step is to orient it en masse."

But the success of Fasel and his team's chemical method, Tour says, will encourage more research into fine-tuning the steps so that nanoribbons of this quality can be produced on a large scale. For instance, researchers can now experiment with the finer edge structure and electronic effects of the new nanoribbons, testing theories that, to date, they could only simulate on computers.

"It points the direction rather than being a final result," says Walter de Heer, a researcher at the Georgia Institute of Technology who has developed a way to grow graphene on silicon chips. "It's a first step in a long chain of steps that will lead to graphene electronics."

By Nidhi Subbaraman

From Technology Review