The results, published in the September 15 online edition of Nature, mark a major advance in microscopy, the use of microscopes for scientific investigation. The findings could lead to treatments for disorders such as myotonic dystrophy, in which messenger RNA gets stuck inside the nucleus of cells.

Real-Time mRNA Export: Messenger RNA molecules (green structures) passing through the nuclear pore (red) from the nucleus to the cytoplasm.

Robert Singer, Ph.D., professor and co-chair of anatomy and structural biology, professor of cell biology and neuroscience, and co-director of the Gruss-Lipper Biophotonics Center at Einstein, is the study's senior author. His co-author, David Grünwald, is at the Kavli Institute of Nanoscience at Delft University of Technology, The Netherlands. Prior to their work, the limit of microscopy resolution was 200 nanometers (billionths of a meter), meaning that molecules closer together than that could not be distinguished as separate entities in living cells. In this paper, the researchers improved that resolution limit 10-fold, successfully differentiating molecules only 20 nanometers apart.

Protein synthesis is arguably the most important of all cellular processes. The instructions for making proteins are encoded in the deoxyribonucleic acid (DNA) of genes, which reside on chromosomes in the nucleus of a cell. In protein synthesis, the DNA instructions of a gene are transcribed, or copied, onto messenger RNA; these molecules of messenger RNA must then travel out of the nucleus and into the cytoplasm, where amino acids are linked together to form the specified proteins.

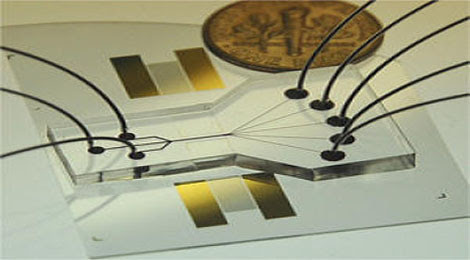

Molecules shuttling between the nucleus and cytoplasm are known to pass through protein complexes called nuclear pores. After tagging messenger RNA molecules with a yellow fluorescent protein (which appears green in the accompanying image) and tagging the nuclear pore with a red fluorescent protein, the researchers used high-speed cameras to film messenger RNA molecules as they traveled across the pores. The Nature paper reveals the dynamic and surprising mechanism by which nuclear pores "translocate" messenger RNA molecules from the nucleus into the cytoplasm. This is the first time the transport of messenger RNA through nuclear pores has been seen in living cells in real time.

"Up until now, we'd really had no idea how messenger RNA travels through nuclear pores," said Dr. Singer. "Researchers intuitively thought that the squeezing of these molecules through a narrow channel such as the nuclear pore would be the slow part of the translocation process. But to our surprise, we observed that messenger RNA molecules pass rapidly through the nuclear pores, and that the slow events were docking on the nuclear side and then waiting for release into the cytoplasm."

More specifically, Dr. Singer found that single messenger RNA molecules arrive at the nuclear pore and wait for 80 milliseconds (80 thousandths of a second) to enter. They then pass through the pore breathtakingly fast, in just 5 milliseconds. Finally, the molecules wait on the other side of the pore for another 80 milliseconds before being released into the cytoplasm.
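To put those figures in proportion, the short Python sketch below adds up the three reported times and computes what share of the journey is spent inside the pore itself. It assumes the three steps happen strictly one after another and uses the article's rounded numbers; the function name `export_summary` is made up for this illustration and is not from the study.

```python
# Times reported in the article, in milliseconds.
DOCKING_MS = 80        # waiting on the nuclear side of the pore
TRANSLOCATION_MS = 5   # passing through the pore itself
RELEASE_MS = 80        # waiting on the cytoplasmic side before release


def export_summary(docking_ms, translocation_ms, release_ms):
    """Return (total time in ms, fraction of that time spent inside the pore).

    Assumption for illustration: the three steps are sequential and do not overlap.
    """
    total = docking_ms + translocation_ms + release_ms
    return total, translocation_ms / total


total_ms, transit_fraction = export_summary(DOCKING_MS, TRANSLOCATION_MS, RELEASE_MS)
print(f"Total export time: {total_ms} ms")
print(f"Share spent inside the pore: {transit_fraction:.1%}")
```

Under these assumptions, the whole trip takes 165 milliseconds, and only about 3 percent of it is the passage through the pore. The rest is waiting on either side, which is the point Dr. Singer makes above.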

The waiting periods observed in this study, and the observation that 10 percent of messenger RNA molecules sit for seconds at nuclear pores without gaining entry, suggest that messenger RNA could be screened for quality at this point.

"Researchers have speculated that messenger RNA molecules that are defective in some way, perhaps because the genes they're derived from are mutated, may be inspected and destroyed before getting into the cytoplasm or a short time later, and the question has been, 'Where might that surveillance be happening?'," said Dr. Singer. "So we're wondering if those messenger RNA molecules that couldn't get through the nuclear pores were subjected to a quality control mechanism that didn't give them a clean bill of health for entry."

In previous research, Dr. Singer studied myotonic dystrophy, a severe inherited disorder marked by wasting of the muscles and caused by a mutation involving repeated DNA sequences of three nucleotides. Dr. Singer found that in the cells of people with myotonic dystrophy, messenger RNA gets stuck in the nucleus and can't enter the cytoplasm. "By understanding how messenger RNA exits the nucleus, we may be able to develop treatments for myotonic dystrophy and other disorders in which messenger RNA transport is blocked," he said.

The paper, "In Vivo Imaging of Labelled Endogenous β-actin mRNA during Nucleocytoplasmic Transport," was published in the September 15 online edition of Nature.

From sciencedaily.com