This is an artist's concept of a comet harpoon embedded in a comet. The

harpoon tip has been rendered semi-transparent so the sample collection

chamber inside can be seen.

Scientists at NASA's Goddard Space Flight Center in Greenbelt, Md.,

are in the early stages of working out the best design for a

sample-collecting comet harpoon. In a lab the size of a large closet

stands a metal ballista (large crossbow) nearly six feet tall, with a

bow made from a pair of truck leaf springs and a bow string made of

steel cable 1/2 inch thick. The ballista is positioned to fire

vertically downward into a bucket of target material. For safety, it's

pointed at the floor, because it could potentially launch test harpoon

tips about a mile if it were angled upward. An electric winch

mechanically pulls the bow string back to generate a precise level of

force, up to 1,000 pounds, firing projectiles at velocities upward of

100 feet per second.
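
As a rough check on the speed and "about a mile" figures above, the following minimal sketch applies the textbook drag-free range formula R = v² sin(2θ) / g. It is an idealization: it ignores air resistance and assumes flat ground. The function names and the 45-degree launch angle are illustrative assumptions, not details from the Goddard team.

```python
import math

G_FT_PER_S2 = 32.174     # standard gravity, ft/s^2
FEET_PER_MILE = 5280.0


def vacuum_range_ft(speed_ft_s: float, angle_deg: float = 45.0) -> float:
    """Drag-free range over flat ground: R = v^2 * sin(2*theta) / g."""
    return speed_ft_s ** 2 * math.sin(math.radians(2 * angle_deg)) / G_FT_PER_S2


def speed_for_range_ft_s(range_ft: float, angle_deg: float = 45.0) -> float:
    """Launch speed needed to reach a given drag-free range (inverse of the above)."""
    return math.sqrt(range_ft * G_FT_PER_S2 / math.sin(math.radians(2 * angle_deg)))


if __name__ == "__main__":
    # Range at the 100 ft/s figure quoted in the article.
    print(f"Range at 100 ft/s: {vacuum_range_ft(100.0):.0f} ft")
    # Speed that a one-mile range would require under the same idealization.
    print(f"Speed for one mile: {speed_for_range_ft_s(FEET_PER_MILE):.0f} ft/s")
```

Under these assumptions, 100 feet per second gives a range of about 310 feet, while a one-mile range needs roughly 412 feet per second. That suggests the ballista's top speed sits well above 100 feet per second, which is consistent with the "upward of" wording.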

Donald Wegel of NASA Goddard, lead engineer on the project, places a

test harpoon in the bolt carrier assembly, steps outside the lab and

moves a heavy wooden safety door with a thick plexiglass window over the

entrance. After dialing in the desired level of force, he flips a

switch and, after a few-second delay, the crossbow fires, launching the

projectile into a 55-gallon drum full of cometary simulant: sand,

salt, pebbles or a mixture of these. The ballista produces a uniquely

impressive thud upon firing, somewhere between a rifle and a cannon

blast.

"We had to bolt it to the floor, because the recoil made the whole

testbed jump after every shot," said Wegel. "We're not sure what we'll

encounter on the comet – the surface could be soft and fluffy, mostly

made up of dust, or it could be ice mixed with pebbles, or even solid

rock. Most likely, there will be areas with different compositions, so

we need to design a harpoon that's capable of penetrating a reasonable

range of materials. The immediate goal, though, is to correlate how much

energy is required to penetrate different depths in different materials. What harpoon tip

geometries penetrate specific materials best? How does the harpoon mass

and cross section affect penetration? The ballista allows us to safely

collect this data and use it to size the cannon that will be used on the

actual mission."
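
To make the energy question concrete, here is a minimal sketch of the quantities such a correlation could start from: the kinetic energy of a shot and that energy divided by the tip's frontal area. The harpoon mass and tip diameter are hypothetical placeholders, since the article gives neither, and the function names are illustrative rather than anything the team uses.

```python
import math

FT_TO_M = 0.3048  # exact conversion, feet to metres


def kinetic_energy_j(mass_kg: float, speed_ft_s: float) -> float:
    """Kinetic energy in joules: E = 1/2 * m * v^2, with v converted to m/s."""
    speed_m_s = speed_ft_s * FT_TO_M
    return 0.5 * mass_kg * speed_m_s ** 2


def energy_per_area_j_cm2(mass_kg: float, speed_ft_s: float, diameter_cm: float) -> float:
    """Kinetic energy divided by the circular frontal area of the tip, in J/cm^2."""
    area_cm2 = math.pi * (diameter_cm / 2.0) ** 2
    return kinetic_energy_j(mass_kg, speed_ft_s) / area_cm2


if __name__ == "__main__":
    # HYPOTHETICAL values: a 1 kg test tip, 2 cm in diameter, fired at 100 ft/s.
    mass_kg, diameter_cm, speed_ft_s = 1.0, 2.0, 100.0
    print(f"Kinetic energy: {kinetic_energy_j(mass_kg, speed_ft_s):.1f} J")
    print(f"Energy per area: {energy_per_area_j_cm2(mass_kg, speed_ft_s, diameter_cm):.1f} J/cm^2")
```

Recording numbers like these alongside the measured penetration depth for each shot and target material is one plausible way to build the energy-versus-depth correlation Wegel describes. The actual test procedure is not given in the article.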

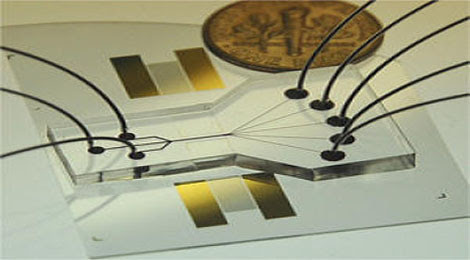

This is a demonstration of the sample collection chamber.

Comets are frozen chunks of ice and dust left over from our solar

system's formation. As such, scientists want a closer look at them for

clues to the origin of planets and, ultimately, ourselves. "One of the

most inspiring reasons to go through the trouble and expense of

collecting a comet sample is to get a look at the 'primordial ooze' –

biomolecules in comets that may have assisted the origin of life," says

Wegel.

Scientists at the Goddard Astrobiology Analytical Laboratory have

found amino acids in samples of comet Wild 2 from NASA's Stardust

mission, and in various carbon-rich meteorites. Amino acids are the

building blocks of proteins, the workhorse molecules of life, used in

everything from structures like hair to enzymes, the catalysts that

speed up or regulate chemical reactions. The research gives support to

the theory that a "kit" of ready-made parts created in space and

delivered to Earth by meteorite and comet impacts gave a boost to the

origin of life.

Although ancient comet impacts could have helped create life, a

present-day hit near a populated region would be highly destructive, as a

comet's large mass and high velocity would make it explode with many

times the force of a typical nuclear bomb. One plan to deal with a comet

headed towards Earth is to deflect it with a large – probably nuclear –

explosion. However, that might turn out to be a really bad idea.

Depending on the comet's composition, such an explosion might just

fragment it into many smaller pieces, with most still headed our way. It

would be like getting hit with a shotgun blast instead of a rifle

bullet. So the second major reason to sample comets is to characterize

the impact threat, according to Wegel. We need to understand how they're

made so we can come up with the best way to deflect them should any

have their sights on us.

"Bringing back a comet sample will also let us analyze it with

advanced instruments that won't fit on a spacecraft or haven't been

invented yet," adds Dr. Joseph Nuth, a comet expert at NASA Goddard and

lead scientist on the project.

This is a photo of the ballista testbed preparing to fire a prototype harpoon into a bucket of material that simulates a comet.

Of course, there are other ways to gather a sample, like using a

drill. However, any mission to a comet has to overcome the challenge of

operating in very low gravity. Comets are small compared to planets,

typically just a few miles across, so their gravity is correspondingly

weak, maybe a millionth that of Earth, according to Nuth. "A spacecraft

wouldn't actually land on a comet; it would have to attach itself

somehow, probably with some kind of harpoon. So we figured if you have

to use a harpoon anyway, you might as well get it to collect your

sample," says Nuth.

Right now, the team is working out the best tip design, cross section,

and explosive powder charge for the harpoon, using the crossbow to fire

tips at various speeds into different materials like sand, ice, and rock

salt. They are also developing a sample collection chamber to fit

inside the hollow tip. "It has to remain reliably open as the tip

penetrates the comet's surface, but then it has to close tightly and

detach from the tip so the sample can be pulled back into the

spacecraft," says Wegel. "Finding the best design that will package into

a very small cross section and successfully collect a sample from the

range of possible materials we may encounter is an enormous challenge."

"You can't do this by crunching numbers in a computer, because nobody

has done it before -- the data doesn't exist yet," says Nuth. "We need

to get data from experiments like this before we can build a computer

model. We're working on answers to the most basic questions, like how

much powder charge do you need so your harpoon doesn't bounce off or go

all the way through the comet. We want to prove the harpoon can

penetrate deep enough, collect a sample, decouple from the tip, and

retract the sample collection device."

The spacecraft will probably have multiple sample collection harpoons

with a variety of powder charges to handle areas on a comet with

different compositions, according to the team. After they have finished

their proof-of-concept work, they plan to apply for funding to develop

an actual instrument. "Since instrument development is more expensive,

we need to show it works first," says Nuth.

Currently, the European Space Agency is sending a mission called

Rosetta that will use a harpoon to grapple a probe named Philae to the

surface of comet 67P/Churyumov-Gerasimenko in 2014 so that a suite of instruments can

analyze the regolith. "The Rosetta harpoon is an ingenious design, but

it does not collect a sample," says Wegel. "We will piggyback on their

work and take it a step further to include a sample-collecting

cartridge. It's important to understand the complex internal friction

encountered by a hollow, core-sampling harpoon."

NASA's recently funded mission to return a sample from an asteroid,

called OSIRIS-REx (Origins, Spectral Interpretation, Resource

Identification, Security -- Regolith Explorer), will gather surface

material using a specialized collector. However, the surface can be

altered by the harsh environment of space. "The next step is to return a

sample from the subsurface because it contains the most primitive and

pristine material," said Wegel.

Both Rosetta and OSIRIS-REx will significantly increase our ability

to navigate to, rendezvous with, and locate specific interesting regions

on these foreign bodies. The fundamental research on harpoon-based

sample retrieval by Wegel and his team is necessary so the technology is

available in time for a subsurface sample return mission.

From physorg