ALPHA stored atoms of antihydrogen, consisting of a single negatively charged antiproton orbited by a single positively charged anti-electron (positron). While the number of trapped anti-atoms is far too small to fuel the Starship Enterprise's matter-antimatter reactor, this advance brings closer the day when scientists will be able to make precision tests of the fundamental symmetries of nature. Measurements of anti-atoms may reveal how the physics of antimatter differs from that of the ordinary matter that dominates the world we know today.

Large quantities of antihydrogen atoms were first made at CERN eight years ago by two other teams. Although they made antimatter they couldn't store it, because the anti-atoms touched the ordinary-matter walls of the experiments within millionths of a second after forming and were instantly annihilated -- completely destroyed by conversion to energy and other particles.

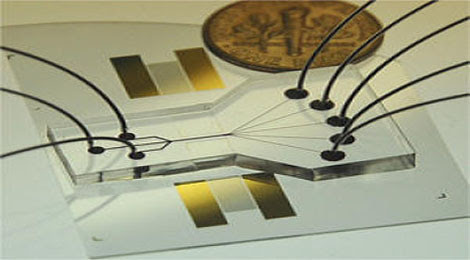

An artist's impression of an antihydrogen atom -- a negatively charged antiproton orbited by a positively charged anti-electron, or positron -- trapped by magnetic fields.

"Trapping antihydrogen proved to be much more difficult than creating antihydrogen," says ALPHA team member Joel Fajans, a scientist in Berkeley Lab's Accelerator and Fusion Research Division (AFRD) and a professor of physics at UC Berkeley. "ALPHA routinely makes thousands of antihydrogen atoms in a single second, but most are too 'hot'" -- too energetic -- "to be held in the trap. We have to be lucky to catch one."

The ALPHA collaboration succeeded by using a specially designed magnetic bottle called a Minimum Magnetic Field Trap. The main component is an octupole (eight-magnetic-pole) magnet whose fields keep anti-atoms away from the walls of the trap and thus prevent them from annihilating. Fajans and his colleagues in AFRD and at UC proposed, designed, and tested the octupole magnet, which was fabricated at Brookhaven. ALPHA team member Jonathan Wurtele of AFRD, also a professor of physics at UC Berkeley, led a team of Berkeley Lab staff members and visiting scientists who used computer simulations to verify the advantages of the octupole trap.

In a forthcoming issue of Nature now online, the ALPHA team reports the results of 335 experimental trials, each lasting one second, during which the anti-atoms were created and stored. The trials were repeated at intervals never shorter than 15 minutes. To form antihydrogen during these sessions, antiprotons were mixed with positrons inside the trap. As soon as the trap's magnet was "quenched," any trapped anti-atoms were released, and their subsequent annihilation was recorded by silicon detectors. In this way the researchers recorded 38 antihydrogen atoms, which had been held in the trap for almost two-tenths of a second.

"Proof that we trapped antihydrogen rests on establishing that our signal is not due to a background," says Fajans. While many more than 38 antihydrogen atoms are likely to have been captured during the 335 trials, the researchers were careful to confirm that each candidate event was in fact an anti-atom annihilation and was not the passage of a cosmic ray or, more difficult to rule out, the annihilation of a bare antiproton.

To discriminate between real events and background, the ALPHA team used computer simulations based on theoretical calculations to show how background events would be distributed in the detector versus how real antihydrogen annihilations would appear. Fajans and Francis Robicheaux of Auburn University contributed simulations of how mirror-trapped antiprotons (those confined by magnet coils around the ends of the octupole magnet) might mimic anti-atom annihilations, and how actual antihydrogen would behave in the trap.

Learning from antimatter

Before 1928, when anti-electrons were predicted on theoretical grounds by Paul Dirac, the existence of antimatter was unsuspected. In 1932 anti-electrons (positrons) were found in cosmic ray debris by Carl Anderson. The first antiprotons were deliberately created in 1955 at Berkeley Lab's Bevatron, the highest-energy particle accelerator of its day.

At first physicists saw no reason why antimatter and matter shouldn't behave symmetrically, that is, obey the laws of physics in the same way. But if so, equal amounts of each would have been made in the big bang -- in which case they should have mutually annihilated, leaving nothing behind. And if somehow that fate were avoided, equal amounts of matter and antimatter should remain today, which is clearly not the case.

In the 1960s, physicists discovered subatomic particles that decayed in a way only possible if the symmetry known as charge conjugation and parity (CP) had been violated in the process. As a result, the researchers realized, antimatter must behave slightly differently from ordinary matter. Still, even though some antiparticles violate CP, antiparticles moving backward in time ought to obey the same laws of physics as do ordinary particles moving forward in time. CPT symmetry (T is for time) should not be violated.

One way to test this assumption would be to compare the energy levels of ordinary electrons orbiting an ordinary proton to the energy levels of positrons orbiting an antiproton, that is, compare the spectra of ordinary hydrogen and antihydrogen atoms. Testing CPT symmetry with antihydrogen atoms is a major goal of the ALPHA experiment.

How to make and store antihydrogen

To make antihydrogen, the accelerators that feed protons to the Large Hadron Collider (LHC) at CERN divert some of these protons and slam them into a metal target to make antiprotons. The antiprotons that result are held in CERN's Antiproton Decelerator ring, which delivers bunches of antiprotons to ALPHA and another antimatter experiment.

Wurtele says, "It's hard to catch p-bars" -- the symbol for antiproton is a small letter p with a bar over it -- "because you have to cool them all the way down from a hundred million electron volts to fifty millionths of an electron volt."
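To put Wurtele's figures in perspective, the short Python sketch below works out the size of that energy reduction and expresses the final energy as an equivalent temperature. The function name `cooling_summary` is purely illustrative, and the conversion E = k_B T is only the usual rule of thumb for quoting a particle energy as a temperature, not a statement about how ALPHA characterizes its antiprotons.

```python
import math

# Boltzmann constant in electron volts per kelvin (CODATA value).
BOLTZMANN_EV_PER_K = 8.617333262e-5


def cooling_summary(start_ev=100e6, final_ev=50e-6):
    """Summarize the energy reduction quoted in the article.

    start_ev: "a hundred million electron volts"
    final_ev: "fifty millionths of an electron volt"
    The temperature uses the rule-of-thumb conversion E = k_B * T,
    which is an assumption made here for illustration.
    """
    factor = start_ev / final_ev
    return {
        "reduction_factor": factor,
        "orders_of_magnitude": math.log10(factor),
        "final_temperature_kelvin": final_ev / BOLTZMANN_EV_PER_K,
    }


if __name__ == "__main__":
    for name, value in cooling_summary().items():
        print(f"{name}: {value:.4g}")
```

The reduction spans a factor of about two trillion, and the final energy corresponds to a temperature of roughly half a kelvin, which gives a sense of why catching antiprotons is hard.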

In the ALPHA experiment the antiprotons are passed through a series of physical barriers, magnetic and electric fields, and clouds of cold electrons, to further cool them. Finally the low-energy antiprotons are introduced into ALPHA's trapping region.

Meanwhile low-energy positrons, originating from decays in a radioactive sodium source, are brought into the trap from the opposite end. Being charged particles, both positrons and antiprotons can be held in separate sections of the trap by a combination of electric and magnetic fields -- a cloud of positrons in an "up well" in the center and the antiprotons in a "down well" toward the ends of the trap.

To join the positrons in their central well, the antiprotons must be carefully nudged by an oscillating electric field, which increases their velocity in a controlled way through a phenomenon called autoresonance.

"It's like pushing a kid on a playground swing," says Fajans, who credits his former graduate student Erik Gilson and Lazar Friedland, a professor at Hebrew University and visitor at Berkeley, with early development of the technique. "How high the swing goes doesn't have as much to do with how hard you push or how heavy the kid is or how long the chains are, but instead with the timing of your pushes."

The novel autoresonance technique turned out to be essential for adding energy to antiprotons precisely, in order to form relatively low energy anti-atoms. The newly formed anti-atoms are neutral in charge, but because of their spin and the distribution of the opposite charges of their components, they have a magnetic moment; provided their energy is low enough, they can be captured in the octupole magnetic field and mirror fields of the Minimum Magnetic Field Trap.
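Fajans's swing analogy for autoresonance can be explored with a toy model. The Python sketch below is not ALPHA's antiproton dynamics: it simulates an ordinary pendulum pushed by a small oscillating force whose frequency is swept slowly downward through the pendulum's natural frequency. Every name and number in it (`peak_swing`, the drive strengths, the sweep rate, the dimensionless units) is a hypothetical choice made for illustration. In this model, a drive that is strong enough stays in step with the pendulum as its swing period lengthens, so the swing keeps growing, while a much weaker drive falls out of step soon after passing through resonance.

```python
import math


def peak_swing(drive_strength, chirp_rate=1e-3, omega0=1.0,
               omega_start=1.3, omega_end=0.6, dt=0.02):
    """Largest |angle| (radians) reached by a pendulum under a chirped drive.

    Toy model: theta'' + omega0**2 * sin(theta) = drive_strength * cos(phase),
    where the drive frequency falls linearly from omega_start to omega_end
    at chirp_rate. All parameters are illustrative, in dimensionless units.
    """
    def rhs(t, theta, vel):
        # Drive phase is the time integral of (omega_start - chirp_rate * t).
        phase = omega_start * t - 0.5 * chirp_rate * t * t
        acc = -omega0 ** 2 * math.sin(theta) + drive_strength * math.cos(phase)
        return vel, acc

    steps = int((omega_start - omega_end) / chirp_rate / dt)
    theta = vel = t = 0.0
    peak = 0.0
    for _ in range(steps):
        # Classic fourth-order Runge-Kutta step.
        k1t, k1v = rhs(t, theta, vel)
        k2t, k2v = rhs(t + dt / 2, theta + dt / 2 * k1t, vel + dt / 2 * k1v)
        k3t, k3v = rhs(t + dt / 2, theta + dt / 2 * k2t, vel + dt / 2 * k2v)
        k4t, k4v = rhs(t + dt, theta + dt * k3t, vel + dt * k3v)
        theta += dt / 6 * (k1t + 2 * k2t + 2 * k3t + k4t)
        vel += dt / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        t += dt
        peak = max(peak, abs(theta))
    return peak


if __name__ == "__main__":
    for strength in (0.1, 0.005):
        print(f"drive strength {strength}: peak swing "
              f"{peak_swing(strength):.2f} rad")
```

Because the final swing of the locked pendulum is set by how far the drive frequency is swept rather than by the exact push strength, the sweep acts as a control knob for the energy added, which is the property the article describes as adding energy "in a controlled way."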

Of the thousands of antihydrogen atoms made in each one-second mixing session, most are too energetic to be held, and they annihilate against the trap walls.

Setting the ALPHA 38 free

After mixing and trapping -- plus the "clearing" of the many bare antiprotons that have not formed antihydrogen -- the superconducting magnet that produces the confining field is abruptly turned off, within a mere nine-thousandths of a second. This causes the magnet to "quench," a quick return to normal conductivity that results in fast heating and stress.

"Millisecond quenches are almost unheard of," Fajans says. "Deliberately turning off a superconducting magnet is usually done thousands of times more slowly, and not with a quench. We did a lot of experiments at Berkeley Lab to make sure the ALPHA magnet could survive multiple rapid quenches."

From the start of the quench the researchers allowed 30-thousandths of a second for any trapped antihydrogen to escape the trap, as well as any bare antiprotons that might still be in the trap. Cosmic rays might also wander through the experiment during this interval. By using electric fields to sweep the trap of charged particles or steer them to one end of the detectors or the other, and by comparing the real data with computer simulations of candidate antihydrogen annihilations and look-alike events, the researchers were able to unambiguously identify 38 antihydrogen atoms that had survived in the trap for at least 172 milliseconds -- almost two-tenths of a second.

Says Fajans, "Our report in Nature describes ALPHA's first successes at trapping antihydrogen atoms, but we're constantly improving the number and length of time we can hold onto them. We're getting close to the point where we can do some classes of experiments on antimatter atoms. The first attempts will be crude, but no one has ever done anything like them before."

From sciencedaily.com